By Dot Lucci, M.Ed., CAGS

Director of Consultation and Psychoeducational Services, NESCA

Artificial Intelligence (AI) is a part of our lives, and we may even be using it ourselves at work, in our cars, and at home. There has been much press about the pros and cons of AI, and it continues to evolve at a rate that our laws can’t keep up with. AI is here to stay and is present in almost all aspects of our lives whether we want it to be or not, or whether we use it deliberately or not. Sometimes, we aren’t even aware of its infiltration into our lives.

So, you’ve heard of AI, but have you heard of character.ai? Do you have an AI companion that keeps you company by chatting with you, helping you with your mental health issues, or brainstorming ideas with you? At this point, you may be wondering what an AI companion is. If you are a parent of a teen or young adult, or work with teens or young adults, you might want to read on. Many of our teens and young adults have heard of it, and according to Common Sense, it is trending among teens and young adults, with 51% having used it at least once and some using it daily as their sole companion (Common Sense, 2024).

Character.ai is an AI tool where you can digitally create a character/persona (avatar) based on famous people, historical figures, athletes, politicians, movie characters, anime, gaming worlds, or anyone from your imagination. This is where your ideal friend and companion comes in! Usually, AI characters are positive, supportive, and caring. There are many different AI apps and tools to pick from, such as character.ai, Talkie, CHAI, and Replika AI, to name a few. Character-based AI differs from regular AI, which is frequently used in professional settings to help create efficiencies in our daily tasks. These character-based tools, however, are built for entertainment, companionship, role playing, and storytelling, as well as for playing games, practicing a foreign language, or getting feedback and brainstorming ideas or topics. These avatars can even be your mental health counselor. You can have a companion/friend at your fingertips who is there to support you at any given moment, 24/7, for entertainment or emotional support. In essence, these apps let you talk in real time with whomever you’ve created, and the character can carry on realistic conversations based on how you’ve programmed it to “be.”

Character.ai and other similar apps are built on large language models (LLM), natural language processing (NLP), and deep machine learning (DML), which allow them to create natural, flowing, and engaging conversations. Like all AI, they have been trained on massive amounts of data, and they consider context and can predict responses. These apps are programmed to appear and sound emotionally concerned. The characters can respond with different tones, word choices, personality traits, and more – all based on what you’ve built into them. Within the privacy settings, you can also make your character available to others on the platform, so you can essentially create a “community of companions and friends” to engage with. These AI companions can even talk amongst themselves with you in a group chat and share differing opinions and ideas for you to think about.

Like all digital platforms and apps, these AI apps have disclaimers, safety rules, and community guidelines. Their safety rules include disclaimers addressing risks like exposure to hate speech, sexual harassment, and self-harm. However, as with all AI and social media, these safeguards are not foolproof and can be overlooked or bypassed. Because the character is trained with LLM, NLP, and DML, the person who interacts with it runs the risk of encountering harmful and inappropriate content in its interactions.

Since our legal system has not yet caught up with the rapid-fire developments of the AI space, legal issues have been emerging about the duty of technology companies to protect the mental health and the emotional and psychological wellbeing of their users, especially those who are more vulnerable. For younger users, or youth who are naïve, have mental health challenges, have less access to professional counselors, or live in countries where mental health care is not endorsed, this type of AI can have dire consequences if left unchecked. For instance, a mother in Florida filed a lawsuit against character.ai after her teenage son, who was diagnosed with an autism spectrum disorder, took his own life after becoming emotionally attached to his character (World Law Group, 2024).

Many users of character.ai and other tools with AI chatbots/avatars allow the characters or avatars to become their sole friend. Users can develop a strong emotional attachment to their character, which can lead to dependence and over-reliance on the character. The danger is that some characters may provide inaccurate, harmful, dangerous, or misleading advice, depending on their database, if they are not monitored properly (which many are not), as in the case of the Florida teen. These avatars are only as good as their training, and they are not humans. Needless to say, they are not fully able to understand the complexities and nuances of human interactions and relationships, particularly in the contexts in which they occur. Becoming more reliant on an AI character can lead to isolation and a decrease in real-life social interaction with family and friends (Science Digest, 2024).

As with all technology, there are advantages and disadvantages to character AI avatars. For many, AI avatars/chatbots can have positive benefits. For instance, they offer immediate access to a listening ear, a non-judgmental friend, mental health support, and more – all of which can quickly turn into cons. They can also be an outlet for creativity and imagination, a way to work through the anxieties of connecting with a person in real life, and a place to practice and try on different ways of interacting.

In the broader context, character AI tools can be used for educational purposes, such as personalizing learning, simulating real-world scenarios, training individuals in various skills, using scientific figures to teach concepts, and more. If used properly, the story-telling and role-playing aspects can be harnessed to create interactive formats.

As with all technology usage, adults should be aware of what teens and young adults are engaged in online. As a parent or caregiver, it is important to stay ahead of this trend and its usage, especially if it is being used as a substitute for human companionship and mental health support. For many young people, “having a friend, being seen, heard, and valued” by a chatbot/avatar is better than not having a friend and not being seen, heard, and valued in real life. Although there are warnings on all of these platforms that the avatar is not real and that the conversation is computer-generated, it may not matter to the user if it feels real, sounds real, and provides real comfort. For them, it is worth it. This real-time friend, who is non-judgmental, supportive, and readily available, is powerful for many teens and young adults.

If you notice a person changing their habits, such as engaging less in real-life activities, connecting less with family and friends, retreating/isolating to their room, becoming secretive, or only talking about their AI character, these could all be “red flags.”

Guidelines for Usage of Character-based AI

If you know a teen who is using character AI, begin by checking in about their app use with a conversation. Approach it with curiosity and openness, not blame and punishment. Ask to see their avatars/characters to see if there is anything potentially harmful about their personalities. Assess whether there is anything beyond the teen’s maturity level. Teens and many young adults do not yet have fully developed frontal lobes, or the critical thinking and decision-making skills needed to use character AI and manage potentially harmful content and situations.

Schools are teaching teens about social media, internet safety, privacy, etc. Parents and caregivers must build on this at home by reviewing the privacy and safety features with their teen/young adult and explaining why they are necessary. Review how to spot harmful content and report it, and make sure they fully understand the app’s community guidelines.

If you feel the user may not be ready to access character-based AI, use parental controls on their devices, in the app store, and on the internet network to restrict character-based AI access. Just like other social media platforms, character-based AI comes with published guidelines. For example, users should not upload images of themselves, friends, or family members, or recordings of their voices.

As teens become more active with AI and character-based AI, set limits on how long and where they can use it (e.g., at the kitchen table), and agree on how they will use it by identifying what function it will serve for them (e.g., practicing conversational foreign language). Explain why the boundaries are there and that you want to help them make good decisions about AI usage in general, as it is and will continue to be all around them, now and as they move on in life, when they will be making these decisions themselves. Set a good foundation early on.

About the Author

NESCA’s Director of Consultation and Psychoeducational Services Dot Lucci has been active in the fields of education, psychology, research and academia for over 30 years. She is a national consultant and speaker on program design and the inclusion of children and adolescents with special needs, especially those diagnosed with Autism Spectrum Disorder (ASD). Prior to joining NESCA, Ms. Lucci was the Principal of the Partners Program/EDCO Collaborative and previously the Program Director and Director of Consultation at MGH/Aspire for 13 years, where she built child, teen and young adult programs and established the 3-Ss (self-awareness, social competency and stress management) as the programming backbone. She also served as director of the Autism Support Center. Ms. Lucci was previously an elementary classroom teacher, special educator, researcher, school psychologist, college professor and director of public schools, a private special education school and an education collaborative.

Ms. Lucci directs NESCA’s consultation services to public and private schools, colleges and universities, businesses and community agencies. She also provides psychoeducational counseling directly to students and parents. Ms. Lucci’s clinical interests include mind-body practices, positive psychology, and the use of technology and biofeedback devices in the instruction of social and emotional learning, especially as they apply to neurodiverse individuals.

To book a consultation with Ms. Lucci or one of our many expert clinicians, complete NESCA’s online intake form. Indicate whether you are seeking an “evaluation” or “consultation” and your preferred clinician/consultant/service in the referral line.

NESCA is a pediatric neuropsychology practice and integrative treatment center with offices in Newton, Plainville, and Hingham, Massachusetts; Londonderry, New Hampshire; the greater Burlington, Vermont region; and Brooklyn, NY, serving clients from infancy through young adulthood and their families. For more information, please email info@nesca-newton.com or call 617-658-9800.

attention deficit disorders, communication disorders, intellectual disabilities, and learning disabilities. She particularly enjoys working with children and their families who have concerns regarding an autism spectrum disorder. Dr. Milana has received specialized training on the administration of the Autism Diagnostic Observation Schedule (ADOS).

attention deficit disorders, communication disorders, intellectual disabilities, and learning disabilities. She particularly enjoys working with children and their families who have concerns regarding an autism spectrum disorder. Dr. Milana has received specialized training on the administration of the Autism Diagnostic Observation Schedule (ADOS).

practicing neuropsychology for 35 years and has been director of NESCA’s Neuropsychology practice for nearly three decades, continuously training and mentoring neuropsychologists to meet the highest professional standards.

practicing neuropsychology for 35 years and has been director of NESCA’s Neuropsychology practice for nearly three decades, continuously training and mentoring neuropsychologists to meet the highest professional standards.

families better understand their child’s unique neurocognitive, developmental, learning, and social-emotional profiles. She specializes in the assessment of toddlers, school-aged children, adolescents, and young adults. Her expertise involves working with youth exhibiting a diverse range of clinical presentations, including neurodevelopmental disorders, such as autism spectrum disorder, attention and executive functioning deficits, learning disabilities, developmental delays, intellectual disabilities, and associated emotional challenges. Dr. Manning is also trained in the assessment of children with medical complexities, recognizing how health conditions can impact a child’s development and functioning. She partners closely with families to develop practical, personalized recommendations that support each individual’s success and growth at home, in school, and within the community.

families better understand their child’s unique neurocognitive, developmental, learning, and social-emotional profiles. She specializes in the assessment of toddlers, school-aged children, adolescents, and young adults. Her expertise involves working with youth exhibiting a diverse range of clinical presentations, including neurodevelopmental disorders, such as autism spectrum disorder, attention and executive functioning deficits, learning disabilities, developmental delays, intellectual disabilities, and associated emotional challenges. Dr. Manning is also trained in the assessment of children with medical complexities, recognizing how health conditions can impact a child’s development and functioning. She partners closely with families to develop practical, personalized recommendations that support each individual’s success and growth at home, in school, and within the community.

from elementary school through young adulthood. In addition to direct client work, Ms. Badamo provides consultation and support to parents and families in order to help change dynamics within the household and/or support the special education processes for students struggling with executive dysfunction. She also provides expert consultation to educators, special educators and related professionals.

from elementary school through young adulthood. In addition to direct client work, Ms. Badamo provides consultation and support to parents and families in order to help change dynamics within the household and/or support the special education processes for students struggling with executive dysfunction. She also provides expert consultation to educators, special educators and related professionals.

and young adults who have complex presentations with a wide range of concerns, including attention deficit disorders, psychiatric disorders, intellectual disabilities, and autism spectrum disorders (ASD). She also values collaboration with families and outside providers to facilitate supports and services that are tailored to each child’s specific needs.

and young adults who have complex presentations with a wide range of concerns, including attention deficit disorders, psychiatric disorders, intellectual disabilities, and autism spectrum disorders (ASD). She also values collaboration with families and outside providers to facilitate supports and services that are tailored to each child’s specific needs.

is interested in uncovering an individual’s unique pattern of strengths and weaknesses to best formulate a plan for intervention and success. She tailors each assessment to address a range of referral questions, such as developmental disabilities, including Autism Spectrum Disorder, learning disabilities, attention challenges, executive functioning deficits, and social-emotional struggles. She also evaluates college-/grad school-age individuals with developmental issues, such as ASD and ADHD, particularly when there is a diagnostic clarity or accommodation question.

is interested in uncovering an individual’s unique pattern of strengths and weaknesses to best formulate a plan for intervention and success. She tailors each assessment to address a range of referral questions, such as developmental disabilities, including Autism Spectrum Disorder, learning disabilities, attention challenges, executive functioning deficits, and social-emotional struggles. She also evaluates college-/grad school-age individuals with developmental issues, such as ASD and ADHD, particularly when there is a diagnostic clarity or accommodation question.

Boston area since 2006. He specializes in the assessment of children and adolescents who present with a wide range of developmental conditions, such as Attention-Deficit/Hyperactivity Disorder, Specific Learning Disorder (reading, writing, math), Intellectual Disability, and Autism Spectrum Disorder; as well as children whose cognitive functioning has been impacted by medical, psychiatric, and genetic conditions. He also has extensive experience working with children who were adopted both domestically and internationally.

Boston area since 2006. He specializes in the assessment of children and adolescents who present with a wide range of developmental conditions, such as Attention-Deficit/Hyperactivity Disorder, Specific Learning Disorder (reading, writing, math), Intellectual Disability, and Autism Spectrum Disorder; as well as children whose cognitive functioning has been impacted by medical, psychiatric, and genetic conditions. He also has extensive experience working with children who were adopted both domestically and internationally.

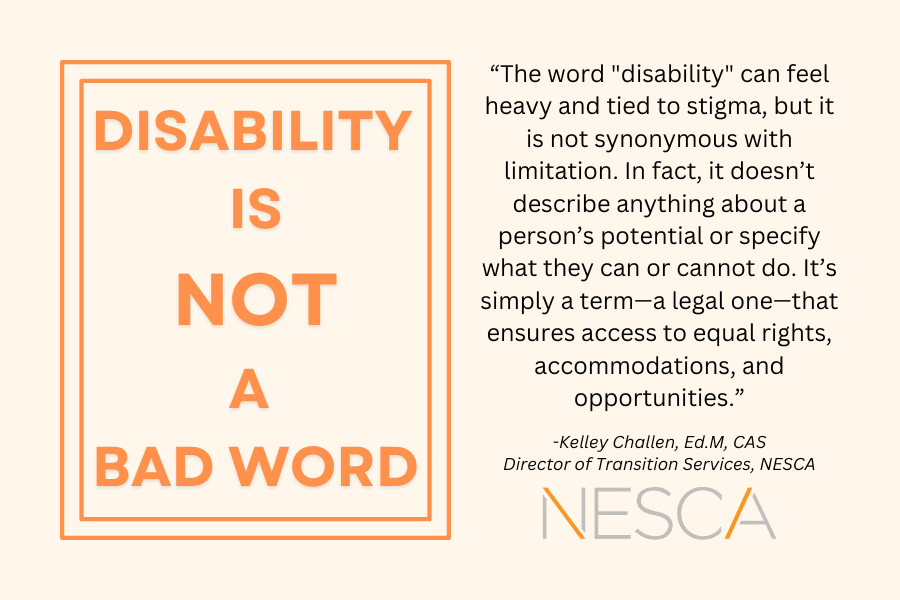

young adults with diverse developmental and learning abilities. Since 2013, she has served as Director of Transition Services at NESCA, offering individualized transition assessments, planning, consultation, coaching, and program development. She specializes in working with students with complex profiles who may not engage with traditional testing tools or programs. Ms. Challen holds a BA in Psychology and a Minor in Hispanic Studies from The College of William and Mary, along with a Master’s and Certificate of Advanced Graduate Study in Risk and Prevention Counseling from the Harvard Graduate School of Education. She is a member of CEC, DCDT, and COPAA, believing it’s vital for all IEP participants to have accurate information about transition planning. Ms. Challen has also been actively involved in the MA DESE IEP Improvement Project, mentored candidates in UMass Boston’s Transition Leadership Program, and co-authored a chapter in Technology Tools for Students with Autism.

young adults with diverse developmental and learning abilities. Since 2013, she has served as Director of Transition Services at NESCA, offering individualized transition assessments, planning, consultation, coaching, and program development. She specializes in working with students with complex profiles who may not engage with traditional testing tools or programs. Ms. Challen holds a BA in Psychology and a Minor in Hispanic Studies from The College of William and Mary, along with a Master’s and Certificate of Advanced Graduate Study in Risk and Prevention Counseling from the Harvard Graduate School of Education. She is a member of CEC, DCDT, and COPAA, believing it’s vital for all IEP participants to have accurate information about transition planning. Ms. Challen has also been actively involved in the MA DESE IEP Improvement Project, mentored candidates in UMass Boston’s Transition Leadership Program, and co-authored a chapter in Technology Tools for Students with Autism.

Connect with Us